Star-Planet Interactions

Stars and planets interact in a variety of exciting and dynamic ways. Stars provide a crucial source of energy input to planets, both as light and as mechanical energy carried by ionized stellar winds. This input can heat the planet, ionize the upper atmosphere, irradiate the surface, and erode the atmosphere. These effects depend on the properties of the star as well as those of the planet.

I am interested both in the effects of a star on a planet and in the coupling of the planet back to the star.

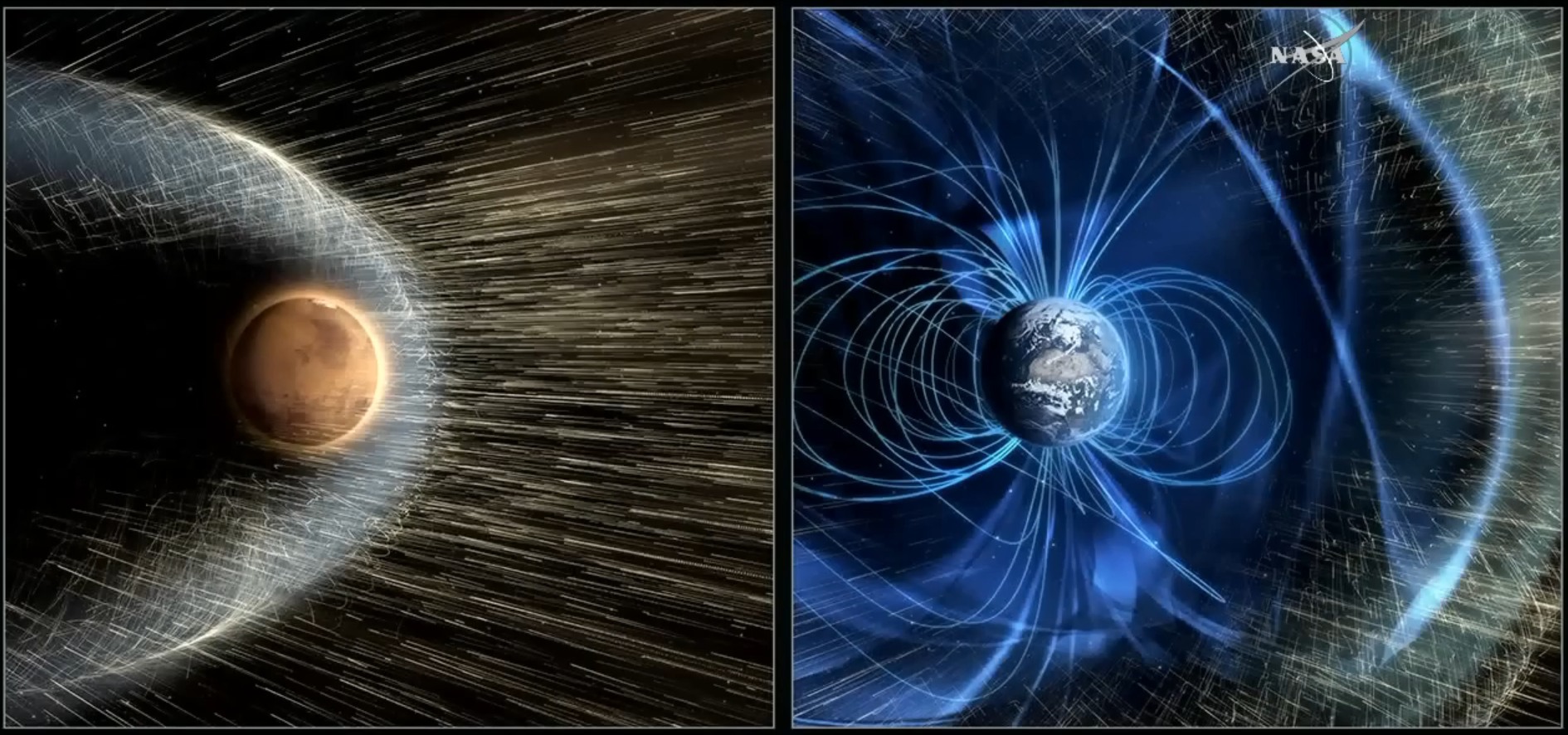

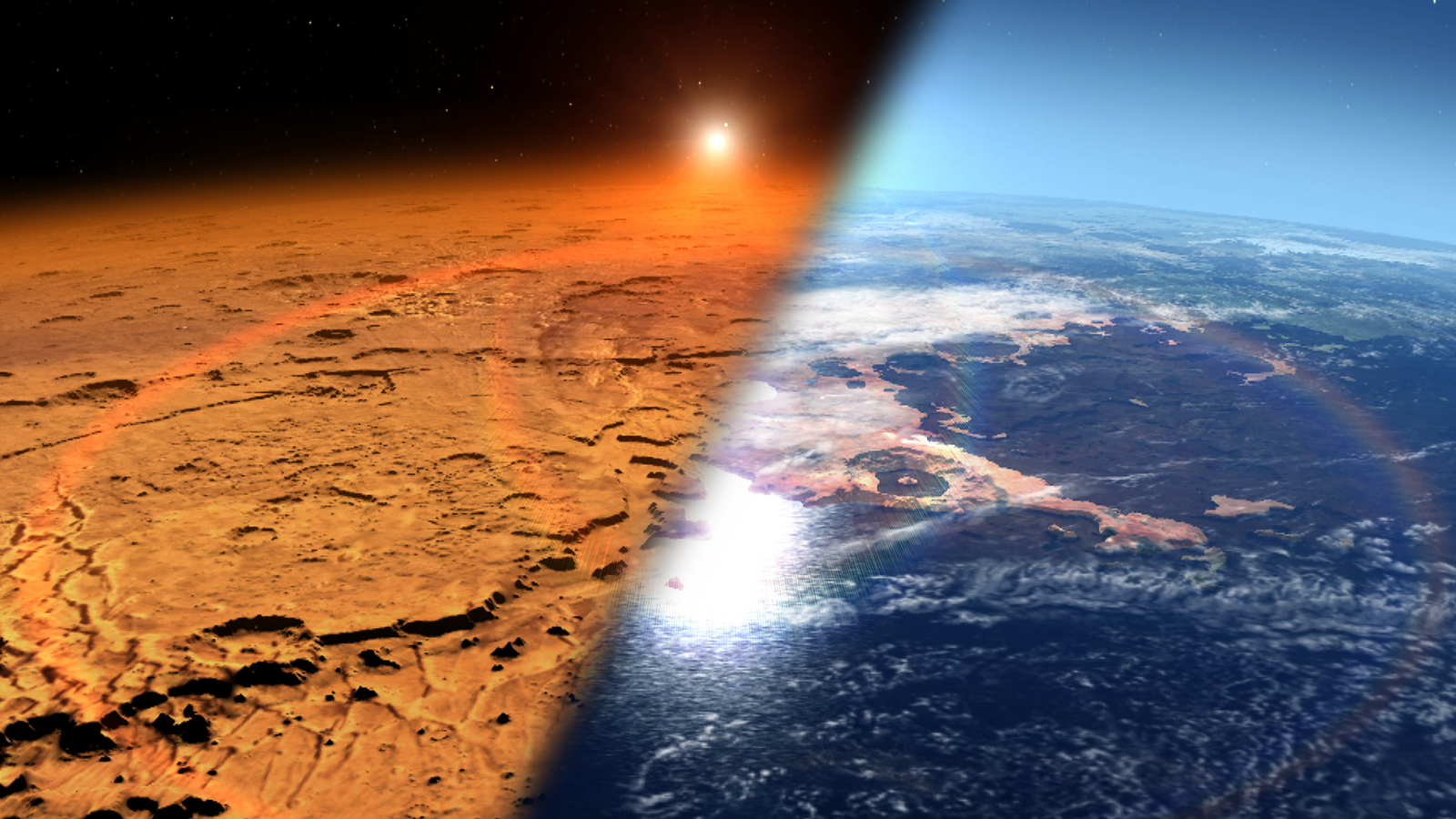

Atmospheric Escape and Evolution

Planetary atmospheres play an important role in habitability and climate evolution, but they are not necessarily stable over billions of years. Mars is thought to have once had a thicker atmosphere and liquid surface water, but it is now a dry planet with very little atmosphere left. Venus may once have been a more Earth-like planet that boiled away all of its water in a runaway greenhouse effect. Even Earth loses atmosphere through its polar regions because of the interaction of its magnetic field with the solar wind. A variety of planetary processes (e.g., sequestration and outgassing) affect the long-term evolution of a planet, but I am primarily interested in loss to space.

Atmospheric loss processes take a variety of forms, but in general a particle escapes when, near the top of the atmosphere, its speed exceeds the planet's escape velocity (equivalently, its kinetic energy exceeds its gravitational binding energy). In thermal loss processes this energy comes from the particles' random thermal motion, whereas in ion escape it comes from the acceleration of charged particles by electric fields. These processes can be observed at solar system bodies (Earth, Mars, Venus, Titan), modeled, and applied to exoplanets.
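As a rough worked example of the escape criterion, the sketch below computes the escape speed at an assumed Martian exobase, the kinetic energy that speed corresponds to for hydrogen and oxygen atoms, and the Jeans escape parameter λ = GMm/(k_B T r), the ratio of gravitational binding energy to thermal energy. The exobase altitude (about 200 km) and exospheric temperature (200 K) are illustrative assumptions, not values from this work.

```python
import math

# Physical constants (SI units)
G = 6.674e-11           # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23      # Boltzmann constant, J/K
AMU = 1.66054e-27       # atomic mass unit, kg
EV = 1.602176634e-19    # joules per electronvolt


def escape_velocity(mass_kg, r_m):
    """Escape speed (m/s) at distance r_m from the center of a body of mass mass_kg."""
    return math.sqrt(2.0 * G * mass_kg / r_m)


def escape_energy_ev(particle_amu, mass_kg, r_m):
    """Kinetic energy (eV) a particle of mass particle_amu needs to reach escape speed."""
    v = escape_velocity(mass_kg, r_m)
    return 0.5 * particle_amu * AMU * v**2 / EV


def jeans_parameter(particle_amu, temperature_k, mass_kg, r_m):
    """Gravitational binding energy divided by k_B * T.

    Large values mean only the far tail of the Maxwell-Boltzmann
    distribution is fast enough to escape thermally.
    """
    binding = G * mass_kg * particle_amu * AMU / r_m
    return binding / (K_B * temperature_k)


# Illustrative Mars values; exobase altitude and temperature are assumptions.
M_MARS = 6.417e23               # kg
R_EXOBASE = 3.3895e6 + 200e3    # m, mean radius plus an assumed ~200 km exobase altitude
T_EXO = 200.0                   # K, assumed exospheric temperature

for name, amu in [("H", 1.008), ("O", 15.999)]:
    energy = escape_energy_ev(amu, M_MARS, R_EXOBASE)
    lam = jeans_parameter(amu, T_EXO, M_MARS, R_EXOBASE)
    print(f"{name}: {energy:.2f} eV needed to escape, Jeans parameter {lam:.1f}")
```

Under these assumptions hydrogen needs only about 0.12 eV and has a Jeans parameter of roughly 7, so the high-energy tail of its thermal distribution can escape, whereas oxygen needs about 2 eV (Jeans parameter above 100), so its thermal escape is negligible and it must gain energy through non-thermal processes.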

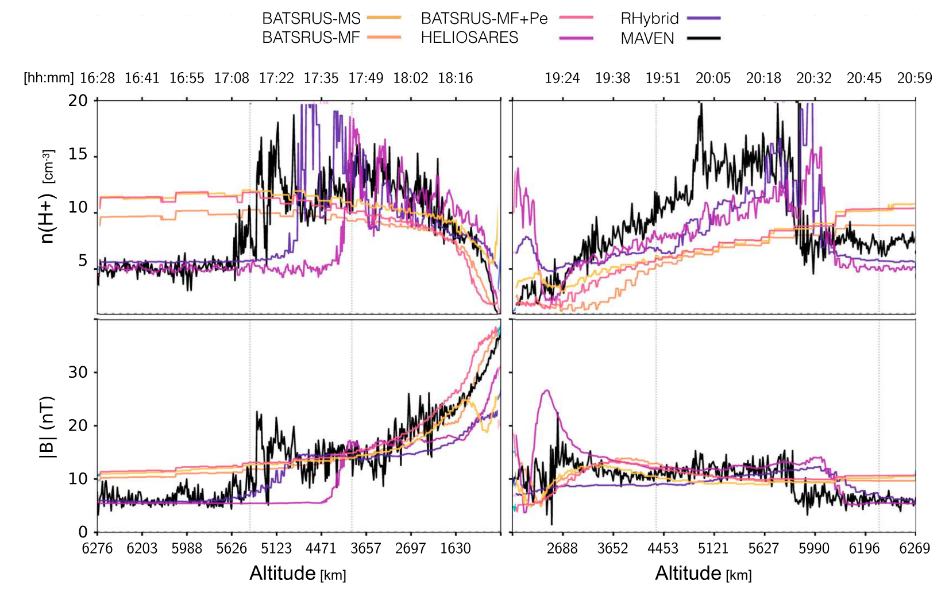

Connecting Simulations and Observations

As simulations get larger and more sophisticated, it is increasingly important to pay attention to the techniques used to connect the results back to observations. I enjoy thinking about novel ways to compare simulations and observations, make predictions, and ground models in reality.

Above: Flythrough of model results along the inbound and outbound portions of orbit #2349 (14 December 2015), excluding periapsis, compared with the corresponding MAVEN data. Via "Comparison of Global Martian Plasma Models in the Context of MAVEN Observations" (Egan et al. 2018).
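A flythrough samples the simulation along the spacecraft trajectory so that model values can be plotted against in-situ measurements on the same time axis. The sketch below does this with trilinear interpolation, assuming the model output is a single scalar field on a uniform Cartesian grid in the same coordinate frame as the spacecraft positions. Global Mars plasma models often use non-uniform or adaptive grids, and the function name `flythrough` is hypothetical rather than the tool used in Egan et al. (2018).

```python
import numpy as np


def flythrough(field, origin, spacing, positions):
    """Trilinearly interpolate a 3-D gridded model field along a trajectory.

    field     : array of shape (nx, ny, nz) with values at grid nodes (each n >= 2)
    origin    : (x0, y0, z0) coordinates of field[0, 0, 0]
    spacing   : (dx, dy, dz) uniform grid spacing
    positions : array of shape (n, 3), spacecraft positions in the same frame
    Returns n interpolated values, NaN where a position lies outside the grid.
    """
    field = np.asarray(field, dtype=float)
    pos = (np.asarray(positions, dtype=float) - np.asarray(origin)) / np.asarray(spacing)
    shape = np.array(field.shape)
    out = np.full(len(pos), np.nan)
    inside = np.all((pos >= 0) & (pos <= shape - 1), axis=1)
    p = pos[inside]
    i0 = np.minimum(np.floor(p).astype(int), shape - 2)  # lower corner of the enclosing cell
    t = p - i0                                            # fractional offset within the cell
    result = np.zeros(len(p))
    # Weighted sum over the 8 corners of each enclosing cell.
    for corner in np.ndindex(2, 2, 2):
        c = np.array(corner)
        weight = np.prod(np.where(c == 1, t, 1.0 - t), axis=1)
        idx = i0 + c
        result += weight * field[idx[:, 0], idx[:, 1], idx[:, 2]]
    out[inside] = result
    return out


# Usage: a linear test field, which trilinear interpolation reproduces exactly.
x = np.arange(5.0)
X, Y, Z = np.meshgrid(x, x, x, indexing="ij")
model = X + 2 * Y + 3 * Z
track = np.array([[0.5, 1.25, 2.0], [3.9, 0.1, 0.0], [10.0, 0.0, 0.0]])
samples = flythrough(model, (0, 0, 0), (1, 1, 1), track)
print(samples)
```

The first two samples match the analytic test field, and the third point, outside the grid, returns NaN so that gaps in model coverage remain visible when plotted against the data.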

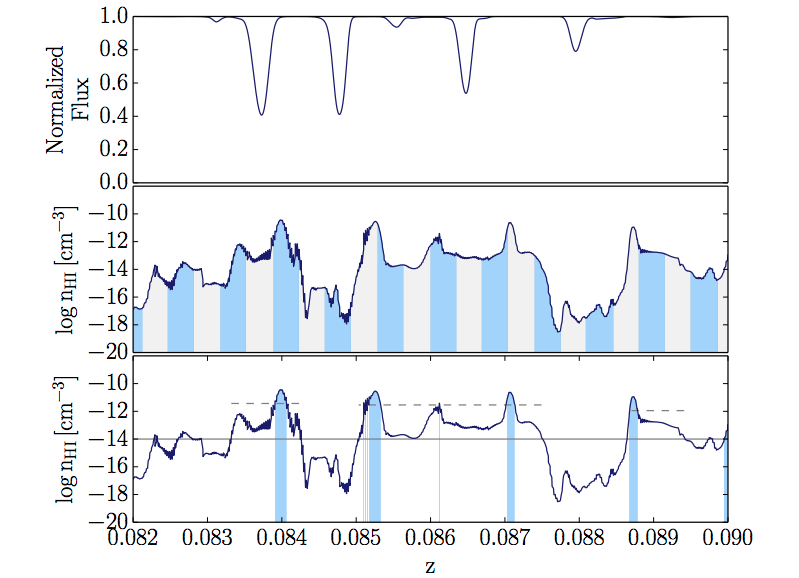

An older project I worked on involved characterizing the distribution of matter in large-scale cosmological simulations. Observationally, this characterization is accomplished using quasar absorption spectra that probe the intergalactic medium along the line of sight, while in simulations we have access to the entire simulation volume.

I created a pipeline that generates and fits synthetic quasar absorption spectra using sight lines cast through a cosmological simulation, and simultaneously identifies structure by directly analyzing the variations in H I and O VI number density along the same sight lines. This module is available through the volumetric analysis package yt. We found that the two methods trace roughly the same quantities of H I and O VI above observable column density limits, but the synthetic spectra typically identify more substructure in absorbers.

An example absorption spectrum and methods of identifying structures in the intergalactic medium from a cosmological simulation. Via "Bringing Simulation and Observation Together to Better Understand the Intergalactic Medium" (Egan et al. 2014).
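The direct, density-based side of this comparison can be illustrated with a short sketch: cells along a sight line whose ion number density exceeds a threshold are grouped into contiguous structures, and each structure's column density, N = Σ n_i Δl_i (a discrete form of N = ∫ n dl), can then be compared with the column densities fitted to the synthetic spectrum. This is a simplified sketch assuming a single fixed density threshold; `find_absorbers` and its arguments are hypothetical names, not the API of the yt module, and the published method may define structures differently.

```python
import numpy as np


def find_absorbers(number_density, dl, threshold):
    """Group contiguous sight-line cells whose ion number density exceeds a threshold.

    number_density : 1-D array of ion number density in each cell along the ray (cm^-3)
    dl             : path length through each cell (cm), array or scalar
    threshold      : density above which a cell belongs to a structure (cm^-3)
    Returns a list of (start, end, column_density) with `end` exclusive and
    column_density = sum(n * dl) over the structure (cm^-2).
    """
    n = np.asarray(number_density, dtype=float)
    dl = np.broadcast_to(np.asarray(dl, dtype=float), n.shape)
    above = n > threshold
    # Pad with False on both ends so every run has a rising and a falling edge.
    edges = np.diff(np.concatenate(([False], above, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    return [(int(s), int(e), float(np.sum(n[s:e] * dl[s:e]))) for s, e in zip(starts, ends)]


# Toy sight line with arbitrary values: two structures separated by empty cells.
density = [0.0, 5.0, 6.0, 0.0, 0.0, 7.0, 0.0]
absorbers = find_absorbers(density, 1e20, threshold=1.0)
for start, end, column in absorbers:
    print(f"cells {start}-{end - 1}: N = {column:.2e} cm^-2")
```

Because each structure is found directly from the density field, it can contain several velocity components that a spectral fit would separate, or blend features that a fit would merge, which is one way the two approaches can disagree on substructure.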

High Performance Scientific Computing and Visualization

Machine Learning

Through the DOE Computational Science Graduate Fellowship I had the opportunity to spend a semester at the National Renewable Energy Lab (NREL), expanding the breadth of my PhD research. I chose to work on a project outside of astrophysics in order to learn machine learning techniques that I hope to bring to my main research focus in the future.

HPC centers are designed to perform at maximum computational efficiency at all times, regardless of the energy needs of the rest of the building. This is a suboptimal design, because not all jobs consume power equally and the energy load of a building can vary drastically throughout the day. I used data collected from NREL’s supercomputing system, Peregrine, to characterize jobs and assess the feasibility of power-constrained scheduling.

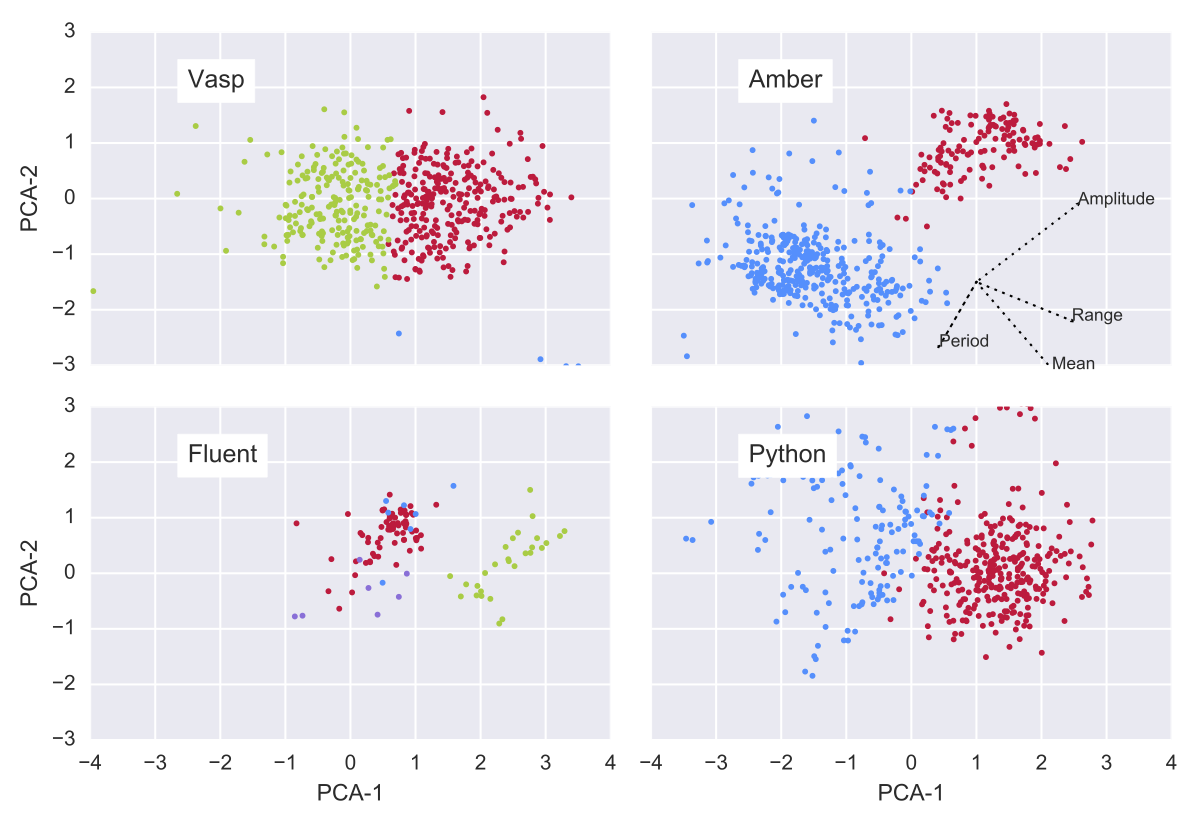

Every 10 seconds, each node in the system is queried and its current power consumption is recorded. These data are then cross-referenced with a complete record of job submission scripts, start times, and end times. From each job’s power time series I calculated a variety of descriptive metrics, which were used for the remainder of the analysis. I used ML techniques to cluster jobs across a variety of application types and to predict the mean power use of every job, broken down by application. Using these predicted power metrics, I developed a job scheduler that attempts to cap the peak power use of the total system.
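Given a predicted mean power for each queued job, one simple way to cap peak system power is a greedy admission rule: start jobs in queue order only if both the free nodes and the remaining power budget allow it. This is a minimal sketch under stated assumptions (a fixed cap, predictions treated as exact, no reservations for skipped jobs); the `Job` fields and the `schedule` function are hypothetical and are not the scheduler described in the related paper.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    nodes: int
    predicted_power_kw: float   # predicted mean power while running (hypothetical field)


def schedule(queue, free_nodes, running_power_kw, power_cap_kw):
    """Start queued jobs in order while staying under node and power limits.

    A job that would exceed either limit stays queued, and later jobs that
    do fit may start ahead of it (a simple greedy backfill).
    Returns (started, still_waiting).
    """
    started, waiting = [], []
    power = running_power_kw
    for job in queue:
        fits_nodes = job.nodes <= free_nodes
        fits_power = power + job.predicted_power_kw <= power_cap_kw
        if fits_nodes and fits_power:
            started.append(job)
            free_nodes -= job.nodes
            power += job.predicted_power_kw
        else:
            waiting.append(job)
    return started, waiting


queue = [Job("vasp_run", 8, 30.0), Job("fluent_run", 4, 25.0), Job("python_job", 1, 2.0)]
started, waiting = schedule(queue, free_nodes=10, running_power_kw=50.0, power_cap_kw=85.0)
print([j.name for j in started], [j.name for j in waiting])
```

Here `fluent_run` waits because only two nodes remain after `vasp_run` starts, while the one-node `python_job` still fits under the 85 kW cap. A production scheduler would also need reservations so that skipped jobs are not starved, plus a safety margin for prediction error.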

Clustering jobs that use a single application (Vasp, Fluent, Python, Amber) into different types based on derived power-use metrics (amplitude, period, range, mean) via principal component analysis. This works well for some programs (e.g., Amber) but poorly for others (e.g., Vasp).

Related Paper: Prediction and characterization of application power use in a high-performance computing environment
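The clustering step can be sketched as follows: reduce each job's power series to a few metrics (mean, range, amplitude, period), standardize them, project onto the leading principal components, and then group jobs in that reduced space. This is a hedged illustration: the metric definitions (amplitude and period taken from the strongest FFT component), the use of k-means for the final grouping, the synthetic data, and the function names are all assumptions, not the pipeline from the related paper.

```python
import numpy as np


def power_metrics(power_w, dt_s=10.0):
    """Summarize one job's power series (one sample every dt_s seconds, >= 3 samples).

    Returns [mean, range, amplitude, period_s]; amplitude and period come from
    the strongest non-zero frequency in the FFT (an illustrative choice).
    """
    p = np.asarray(power_w, dtype=float)
    spectrum = np.abs(np.fft.rfft(p - p.mean()))
    freqs = np.fft.rfftfreq(p.size, d=dt_s)
    k = 1 + int(np.argmax(spectrum[1:]))        # skip the zero-frequency bin
    amplitude = 2.0 * spectrum[k] / p.size      # sinusoid amplitude at that frequency
    return np.array([p.mean(), p.max() - p.min(), amplitude, 1.0 / freqs[k]])


def pca(features, n_components=2):
    """Project standardized feature rows onto their leading principal components."""
    X = np.asarray(features, dtype=float)
    std = X.std(axis=0)
    std[std == 0] = 1.0                         # avoid dividing constant columns by zero
    X = (X - X.mean(axis=0)) / std
    _, s, vt = np.linalg.svd(X, full_matrices=False)  # rows of vt are the principal axes
    explained = s**2 / np.sum(s**2)
    return X @ vt[:n_components].T, explained[:n_components]


def kmeans(points, k, iters=50):
    """Lloyd's k-means with deterministic farthest-first initialization."""
    centers = [points[0]]
    for _ in range(1, k):
        # Next center: the point farthest from every center chosen so far.
        d = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(iters):
        dist = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dist, axis=1)
        # Move each center to the mean of its members (keep it if the cluster is empty).
        centers = np.array([points[labels == j].mean(axis=0) if np.any(labels == j)
                            else centers[j] for j in range(k)])
    return labels, centers


# Idealized synthetic jobs: one hour of power sampled every 10 s.
t = np.arange(360) * 10.0
steady = [np.full(t.size, 195.0 + i) for i in range(10)]
cyclic = [300.0 + (70.0 + 2 * i) * np.sin(2 * np.pi * t / 600.0) for i in range(10)]
features = np.array([power_metrics(p) for p in steady + cyclic])
projected, explained = pca(features)
labels, _ = kmeans(projected, k=2)
print(labels, explained)
```

The two idealized job types land in separate clusters here; real power traces are noisier, and, as the caption notes, the separation is clean for some applications and poor for others.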